At the 2015 DARPA Robotics Challenge we spoke with Team IHMC, which finished the competition in second place and was one of only three teams to complete all eight tasks. The team was one of seven to use the Atlas robot, designed by Boston Dynamics (now owned by Google). That Team IHMC was the only Atlas team to place in the DARPA Robotics Challenge top five is a testament to its innovative walking algorithm. As Team IHMC mechanical engineer Jesper Smith told us, “Atlas comes with a very basic walking algorithm and some teams use it. It’s pretty capable actually, but we think we’re better at walking, so we made our own walking algorithm.” While sharing with iDigitalTimes what it took to succeed at the DARPA Robotics Challenge, Smith described a bizarre sense that is essential not only for robots but for humans as well.

Congratulations to Teams KAIST, IHMC Robotics and Tartan Rescue, the winners of the DARPA Robotics Challenge! You made history. #DARPADRC

- DARPA (@DARPA) June 7, 2015

Beyond the traditional five senses, humans come loaded with other methods of evaluating their surroundings and themselves. These additional senses include pain, balance, thermoception (the ability to feel temperature differences), and a capacity known as proprioception.

Since Charles Bell added a sixth sense, which he called muscle sense, to the human repertoire in 1826, proprioception has come to be understood well enough to be duplicated in a mechanical and digital body: the robot.

“What you call proprioception in a human, if you close your eyes you know where your foot is. If your foot is off by a centimeter or two, then you're going to fall,” Smith said. “Same for the robot.”

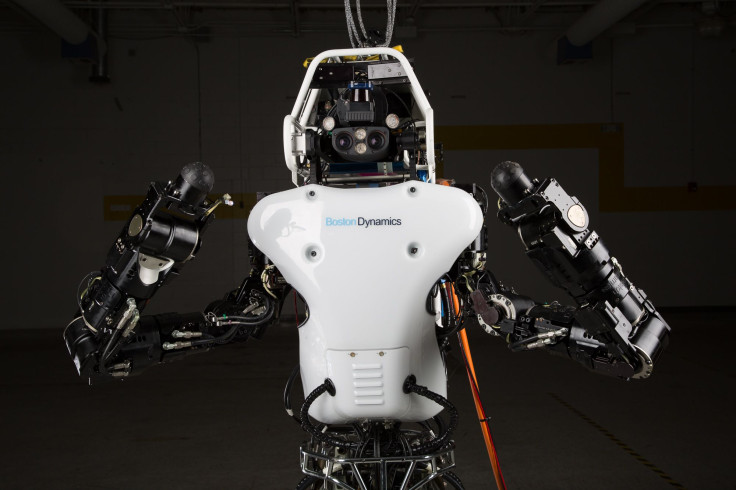

Team IHMC Robot at the DARPA Robotics Challenge

Human proprioception is provided by a combination of neurons in the inner ear and stretch receptors inside skeletal striated muscle (the kind of muscle you can flex voluntarily), which feed joint position and tension data to the cerebellum (and to the cerebrum via slightly different mechanisms). What matters is that proprioception allows us to move with awareness of what we are moving and where we are moving it. It is the sensation of our own body.

So how do you duplicate that in a robot? Mostly by measuring as much as possible. This has led to a wealth of sensors, including joint encoders, inertial measurement units, body attitude sensors, ground contact sensors, pronking controllers, GPS, shaft encoders, inclinometers, and angular rate gyros.

Because robotics has no single standard and no one method for taking in proprioceptive data, the real trick is uniting information from multiple sensors into a form that’s useful both to the robot’s brain (as it makes automatic weight adjustments to counteract force and movement) and to the human operators, who need the best possible information.
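One common way to unite two of the sensors listed above is a complementary filter, which blends a fast but drifting gyro with a slower, noisier tilt reading. The sketch below is only an illustration under stated assumptions: the function name, the 0.98 blend factor, the bias and the noise values are all hypothetical, and nothing here is claimed to be Team IHMC's method.

```python
def complementary_filter(gyro_rates, tilt_angles, dt, alpha=0.98, initial_angle=0.0):
    """Fuse gyro rates (rad/s) and tilt readings (rad) into pitch estimates.

    alpha weights the gyro-integrated prediction; (1 - alpha) weights the
    tilt reading. Both values are illustrative assumptions.
    """
    angle = initial_angle
    estimates = []
    for rate, tilt in zip(gyro_rates, tilt_angles):
        predicted = angle + rate * dt                       # short-term: integrate the gyro
        angle = alpha * predicted + (1.0 - alpha) * tilt    # long-term: lean on the tilt sensor
        estimates.append(angle)
    return estimates


# Hypothetical scenario: the robot holds a constant 0.1 rad pitch.
dt = 0.01            # seconds per sample (assumed)
steps = 1000
true_pitch = 0.1     # rad
gyro_bias = 0.05     # rad/s of pure sensor bias; the true rate is zero

gyro_rates = [gyro_bias] * steps
# Tilt sensor is unbiased but jittery (deterministic +/- 0.02 rad for repeatability).
tilt_angles = [true_pitch + (0.02 if i % 2 == 0 else -0.02) for i in range(steps)]

fused = complementary_filter(gyro_rates, tilt_angles, dt)
gyro_only = sum(rate * dt for rate in gyro_rates)  # naive integration from zero

print(f"true pitch:       {true_pitch:.3f} rad")
print(f"gyro integration: {gyro_only:.3f} rad")
print(f"fused estimate:   {fused[-1]:.3f} rad")
```

In this toy setup the gyro-only estimate keeps drifting with the bias, while the fused estimate stays close to the true pitch. A real humanoid would more likely use a full state estimator over many sensors, but the idea of weighting each source by what it is good at is the same.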

IHMC to top of stairs! #DARPADRC pic.twitter.com/expZVrKJrJ

- SVRobotics (@svrobo) June 6, 2015

When we spoke with Team IHMC, they were busy recalibrating their Atlas robot’s proprioceptive measurements after two nasty falls in their first run at the challenge course:

DARPA Robotics Challenge Robot Falls

“So we fell a few times during our run and we broke some parts of the robots. We are recalibrating to zero. We have to shake it out real well, because after recalibration, we're kind of wondering ‘are we still walking correctly?’” Smith said.

The process of recalibrating a robot’s proprioception may sound more like adjusting gun sights than tweaking a sophisticated sense of self, but it’s how the data is processed that really matters.
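To make the stakes of recalibration concrete, here is a minimal sketch that assumes "recalibrating to zero" means re-measuring joint encoder zero offsets in a known reference pose. That interpretation, the planar two-link leg, the 0.45 m link lengths, and all function names are assumptions for illustration, not details confirmed by the team.

```python
import math


def calibrate_offsets(raw_at_reference, reference_angles):
    """Offset per joint = raw encoder reading minus the known reference angle."""
    return [raw - ref for raw, ref in zip(raw_at_reference, reference_angles)]


def apply_offsets(raw_angles, offsets):
    """Correct raw encoder readings with previously measured offsets."""
    return [raw - off for raw, off in zip(raw_angles, offsets)]


def foot_position(hip, knee, thigh=0.45, shin=0.45):
    """Planar two-link leg (hypothetical lengths in meters).

    Angles are in radians, measured from straight down.
    Returns the foot's (x, z) position relative to the hip.
    """
    x = thigh * math.sin(hip) + shin * math.sin(hip + knee)
    z = -thigh * math.cos(hip) - shin * math.cos(hip + knee)
    return x, z


# After a fall, the encoders read slightly off while the leg is held straight (0, 0).
offsets = calibrate_offsets(raw_at_reference=[0.02, -0.01], reference_angles=[0.0, 0.0])

raw = [0.32, 0.49]                      # later raw readings for hip and knee
corrected = apply_offsets(raw, offsets)

believed = foot_position(*raw)          # where the robot thinks the foot is without recalibration
actual = foot_position(*corrected)      # where the foot is once offsets are removed
error_m = math.dist(believed, actual)

print(f"offsets (rad): {offsets}")
print(f"foot position error without recalibration: {error_m * 100:.1f} cm")
```

With these made-up numbers, offsets of only 0.01 to 0.02 rad shift the believed foot position by more than a centimeter, which is the scale of error Smith says is enough to cause a fall.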

Robotic Force Control

“We’re force control, we don’t care how the foot is oriented,” Smith said. With force control, the force applied is taken into account, allowing a robot to adjust to changing real-world conditions such as sand. Rather than simply instructing a robot to move to a fixed real-world position, which could lead to a tumble should the ground shift, a force-controlled movement adjusts to conditions, continuing until the external force needed for stability is achieved.
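The contrast between moving to a position and moving until a force is reached can be sketched in one dimension. This is a toy model under stated assumptions, not Atlas's or IHMC's controller: the ground is a hypothetical linear spring, and the gain, stiffness values, target force, and function names are invented for illustration.

```python
def ground_force(foot_height, ground_height, stiffness):
    """Hypothetical ground model: a linear spring that pushes back on penetration."""
    penetration = ground_height - foot_height
    return stiffness * penetration if penetration > 0 else 0.0


def lower_foot_until_loaded(start_height, ground_height, stiffness,
                            target_force, gain=2e-6, tolerance=1.0, max_steps=10000):
    """Lower the foot until the measured force is within tolerance of the target.

    The controller never sees ground_height or stiffness; it only reacts to
    the measured force. gain (m per N of force error) is an assumed value.
    """
    height = start_height
    for _ in range(max_steps):
        error = target_force - ground_force(height, ground_height, stiffness)
        if abs(error) <= tolerance:
            break
        height -= gain * error  # too little force: push down; too much: back off
    return height, ground_force(height, ground_height, stiffness)


target = 400.0  # N of support force wanted under the foot (assumed)

# Firm ground where expected, and soft, sand-like ground 2 cm lower than expected.
firm_height, firm_force = lower_foot_until_loaded(0.10, 0.00, 200_000.0, target)
soft_height, soft_force = lower_foot_until_loaded(0.10, -0.02, 50_000.0, target)

# Position control for comparison: command the foot to height 0.0 regardless of terrain.
position_force_on_soft = ground_force(0.0, -0.02, 50_000.0)

print(f"firm ground: foot at {firm_height:.4f} m, force {firm_force:.0f} N")
print(f"soft ground: foot at {soft_height:.4f} m, force {soft_force:.0f} N")
print(f"position control on soft ground: force {position_force_on_soft:.0f} N")
```

In this sketch the force-controlled foot ends up at different heights on the two surfaces but carries roughly the same load on both, while the position-commanded foot on the lower, softer ground is left hanging with no support at all.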

New research has added not only better sensors, but better ways to use the information gained. More and more of proprioception is becoming autonomous, as robots make micro-adjustments to stay balanced, grasp objects, and know where they are positioned in relation to their environment.

Our recap of the @DARPA Robotics Challenge & where #robotics is today. http://t.co/jZiiFJQPaH pic.twitter.com/ZnsgZF4HdK

- Singularity Hub (@singularityhub) June 11, 2015

Robots Need Us In Their Infancy

Still, the larger decisions are made by humans. The relationship resembles the way we experience our own body’s movements: the robot controls the unconscious mechanisms of stability and fine detail, while the conscious human decides how to steer that self-composure.

“We spent a lot of time to make the interface, make it very clear what the robot is going to do,” Smith said. “There is a lot of autonomy, but we like to have the human in the loop at all times. Never have the robot do something surprising. Everything the robot does should be obvious and clear and transparent to the user.”

For humans, proprioception is an essential part of our self-identity, its continuity allowing us the persistence of presence in our own bodies. But for now robot proprioception remains more about us than them, with much of the decision-making essentially outsourced to conscious operators. “Have the robot do what it's good at: balancing. Have the human do what it's good at: recognition.”